Formant gives robot companies the ability to observe, operate, and analyze robots at scale. We have a deep bench of roboticists and cloud engineers, but what we don’t have (and don’t want to have) is thousands of robots lying around waiting to be turned on. Robots are expensive and hard! Been there, done that. Our business is helping robot companies scale, and we do this through a web observability interface that enables humans to manage field-deployed robots. Given the cost, complexity, and non-standardization of robotic hardware, we realized that a “digital double” (a virtual robot proxy that can be spawned in minutes and put through the virtual wringer) would be extremely beneficial to our product development and testing. Feature iteration, user experience testing, and load testing would traditionally require a massive investment in inflexible hardware. We chose an alternate path: a malleable, iterative environment that we believe is poised to have a massive impact on the development of robotic hardware, applications, and, in our case, infrastructure. Let’s talk about robot simulators and how we built ours with Unity.

Robotic simulators have their place

When you think of robot simulators, you probably think of Gazebo and V-REP, or maybe Amazon’s new(er) RoboMaker. These solutions provide highly accurate physics engines for the robust testing and design of robots and their associated applications. They are essentially developer tools, though: if you want to use them, you’ll need to be an engineer. To create a robust simulation, you’ll need detailed requirements and ample time. In other words, it’s another development project. That didn’t feel right, so we kept brainstorming. Formant is made up of roboticists and software engineers, but we also have deep experience in design and professional content creation tools. We’re as familiar with After Effects as we are with Kafka. It’s that omni-disciplinary orientation that led us to consider a game engine, Unity, as the platform for our robot digital doubles and robot simulator. The Unity engine provides a malleable, approachable environment for improving our platform.

Backed by an army of devoted world builders

Unity has become a ubiquitous platform for 2D, 3D, AR, and VR interactive content. It has more than 5,000,000 registered developers, runs on 25+ platforms, and has an asset store with a million+ objects (and over 1,000,000 monthly downloads). Unity is a juggernaut. It is technically a proprietary platform, but it’s also a community, and a far larger one than we find in the robotics domain. That said, we think the two worlds overlap significantly in terms of requirements. Both need to visualize rich, multi-dimensional data. Both depend on physics and require algorithms for things like inverse kinematics, path planning, and AI. Unity represents the way new creative technologists like to work. It’s collaborative, malleable, extensible, iterative… You can copy/borrow/leverage existing assets from other people’s work, bend situations, experiment, test, iterate… This is what game developers do all day long. Furthermore, it’s how they think.

Unity fosters a focus on ‘time to delivery’ by providing prefabs: color pickers, physics engines, rendering engines, state engines, event handlers, advanced IO, and more. The takeaway here is that it’s easy to build a Unity robot simulator… Quickly.

Simulate your customers’ pain points — iteratively

Many of our robotics customers concerned with fleet management operate in the logistics/warehousing space, so we chose to model a warehouse environment as our first ‘Level’. We were able to construct the digital environment and create waypoints, goals (e.g. AGV charging stations, sorting stations), and obstacles in short order. We even dialed up a few humans with plug-and-play walk cycles and AI to create variability and test safety scenarios. Through Unity’s visual, real-time tools we were able to modify the environment on the fly to evaluate new features. The following screenshot is Formant’s robot fleet operations interface. In this case, the user is observing the warehouse ‘game’, which is continuously publishing data to our cloud back end. When a robot encounters an issue, it looks and smells like a real system from both the user’s perspective and our product’s perspective. The scale of the in-game fleet deployment and its reliability are entirely within our control.

We have built a rich testing environment that allows us to test multiple aspects of our platform’s front-end and back-end performance. How good are our tools for fleet administration? How quickly can we help someone find the root cause of an engineering problem? How do we perform with massive amounts of rich sensor data? What are our latencies? What’s the right amount of information to present to the user? Are our integrations performant? The following are a couple of examples of how we built pieces of our simulation “game”.

Create-a-cam: the advantages of a virtualized sensor

We wanted to test our cloud ingestion pipeline throughput so we focused on imagery since it is a particularly taxing data stream. We wanted to be able to test different lenses, image resolution, signal-to-noise ratios, and more. IRL? There’s no way we could have tested more than a handful of permutations.
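To give a feel for how quickly those permutations multiply, here is a minimal Python sketch of a camera test matrix. It is not our actual test harness: the resolution and frame-rate values, the uncompressed 8-bit RGB assumption, and the names `raw_bandwidth_mbps` and `build_sweep` are all hypothetical, chosen only to illustrate the idea.

```python
from itertools import product

# Hypothetical test matrix: illustrative values only.
RESOLUTIONS = [(640, 480), (1280, 720), (1920, 1080)]
FRAME_RATES_HZ = [5, 15, 30]
BYTES_PER_PIXEL = 3  # assumes uncompressed 8-bit RGB


def raw_bandwidth_mbps(width, height, fps, bytes_per_pixel=BYTES_PER_PIXEL):
    """Bandwidth of an uncompressed image stream, in megabits per second."""
    bits_per_second = width * height * bytes_per_pixel * 8 * fps
    return bits_per_second / 1_000_000


def build_sweep(resolutions=RESOLUTIONS, frame_rates=FRAME_RATES_HZ):
    """Return one test case per (resolution, frame rate) combination."""
    return [
        {"width": w, "height": h, "fps": fps,
         "raw_mbps": raw_bandwidth_mbps(w, h, fps)}
        for (w, h), fps in product(resolutions, frame_rates)
    ]


if __name__ == "__main__":
    for case in build_sweep():
        print(f"{case['width']}x{case['height']} @ {case['fps']} Hz: "
              f"{case['raw_mbps']:.1f} Mbit/s raw")
```

Three resolutions and three frame rates already yield nine cases; adding lens and noise settings multiplies that count again, which is why a virtual camera is so much more practical than swapping physical hardware.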

A core value proposition of our product is how we analyze and present highly visual data (video, point clouds, 6DOF pose, etc.) to human operators. It was key to get this right from both a technology and a usability perspective. In Unity, we could iterate. We were able to flip, defocus, scale, add latency, add noise, etc. with a few clicks. For example, using a camera effects package like Colorful FX, you can apply video effects to simulate lens idiosyncrasies like chromatic aberration or barrel distortion.

Example of an image post-processing interface (Colorful FX) available in the Asset Store

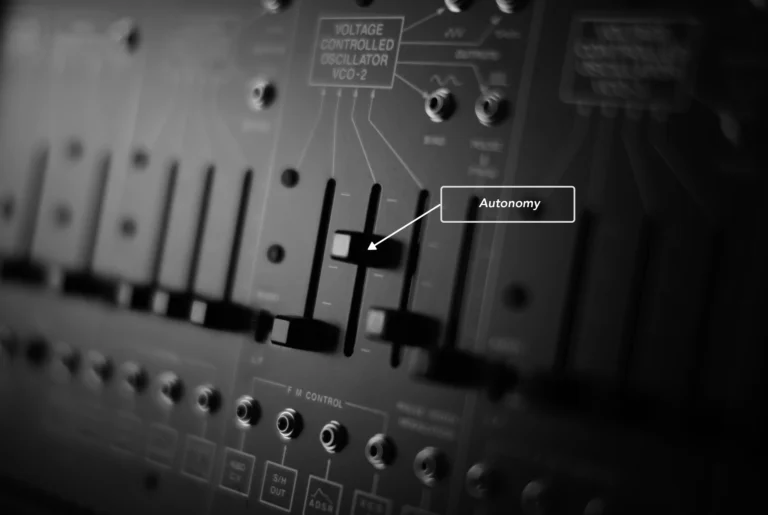

We created sliders for resolution, focal length, and frame rate. If we want fine-grained control, we can introduce “noise” via a particle system, or even write a custom shader. The shader would be an example of developing custom code, but at least we’re focusing our precious engineering resources on a single aspect of the problem. Modeling, AI, lighting, data-piping, IO, and physics are handled by the engine already.
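As an illustration of what a noise “slider” might control, here is a small Python sketch that adds zero-mean Gaussian noise to a list of grayscale pixel values to hit a target signal-to-noise ratio. This is a swapped-in technique for illustration, not the particle system or shader approach described above, and the function names `noise_sigma_for_snr` and `add_sensor_noise` are hypothetical.

```python
import math
import random


def noise_sigma_for_snr(pixels, snr_db):
    """Standard deviation of zero-mean noise that yields the target SNR (dB)."""
    if not pixels:
        raise ValueError("pixels must not be empty")
    signal_power = sum(p * p for p in pixels) / len(pixels)
    # SNR_dB = 10 * log10(signal_power / noise_power)
    noise_power = signal_power / (10 ** (snr_db / 10))
    return math.sqrt(noise_power)


def add_sensor_noise(pixels, snr_db, seed=None):
    """Return a noisy copy of 8-bit grayscale pixels, clamped to 0..255."""
    rng = random.Random(seed)  # a seed makes test runs repeatable
    sigma = noise_sigma_for_snr(pixels, snr_db)
    return [min(255, max(0, round(p + rng.gauss(0.0, sigma)))) for p in pixels]


if __name__ == "__main__":
    frame = [100] * 8  # a flat gray test strip (assumed input)
    print(add_sensor_noise(frame, snr_db=20, seed=1))
```

Lowering `snr_db` makes the simulated sensor noisier, so a single parameter can stand in for a whole family of cheap or degraded cameras.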

Simulating alerts: steal (I mean leverage) everything you can

We work very hard to allow humans to manage robotics fleets with ease, and a key objective is to ensure that one operator can manage as many robots as possible. This comes down to ensuring that the right person receives the right message at the right time. We call this Alerting and Interventions. As you might imagine, some of the most critical messages we send are safety-related. We created a sandbox to get this right.

The task

Alert a human operator when a robot detects a human within its operational zone. Creating this scenario involved importing an existing robot model and building a tuneable virtual sensor suite (lidar, video, pose, telemetry).

Once the ‘on-robot’ sensor suite was deployed, we added “security” POV cameras for situational awareness and installed our telemetry ingestion agent on the virtual robot to stream its data in real time to our cloud back end. We downloaded a human model from Mixamo’s online character library, applied built-in AI, and asked him to walk about the warehouse. To generate our “human detected” alert, we employed code from Unity’s 3D Game Kit to tell the system when the human character is within the field of view of the camera sensor. In a gaming context, this standard NPC (non-player character) logic is typically used to alert game elements and their AI to the presence of the player; here it served well to get our bot to recognize a human and send up a smoke signal.
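The detection logic itself is simple geometry. The sketch below is a 2D Python approximation of a field-of-view check, not the 3D Game Kit code we actually used; the function name, the default 90-degree field of view, and the 10-unit range are assumptions for illustration. It also ignores occlusion, which a real detector would typically handle with a line-of-sight test.

```python
import math


def is_in_field_of_view(sensor_pos, sensor_heading_deg, target_pos,
                        fov_deg=90.0, max_range=10.0):
    """Return True if target_pos lies inside the sensor's 2D view cone."""
    dx = target_pos[0] - sensor_pos[0]
    dy = target_pos[1] - sensor_pos[1]
    distance = math.hypot(dx, dy)
    if distance > max_range:
        return False
    if distance == 0.0:
        return True  # target is on top of the sensor
    bearing_deg = math.degrees(math.atan2(dy, dx))
    # Smallest signed angle between bearing and heading, in [-180, 180).
    offset = (bearing_deg - sensor_heading_deg + 180.0) % 360.0 - 180.0
    return abs(offset) <= fov_deg / 2.0


if __name__ == "__main__":
    robot = (0.0, 0.0)
    human = (5.0, 1.0)
    if is_in_field_of_view(robot, 0.0, human):
        print("ALERT: human detected in operational zone")
```

In the simulator, a check like this would run every frame for each human character, and a positive result would be published as an event for the alerting pipeline to pick up.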

Configurable detector script as prefab in Unity

With these components in place, we had a testing environment complete with a robotic “digital double” that would allow us to test and experiment at scale. In addition to being immediately modifiable, the virtualized robotic hardware was as reliable (or unreliable, for that matter) as we needed it to be. Notably, the only thing we had to create from scratch was the virtual lidar sensor. Everything else was off the shelf, either built into the engine or downloaded from a third party.
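For readers curious about what a virtual lidar involves, the core idea is ray casting: fire a fan of rays from the sensor and record the distance to the first thing each ray hits. The Python sketch below shows that idea in 2D against circular obstacles. It is a simplified illustration under those assumptions, not our Unity implementation, and the names `ray_circle_range` and `scan` are hypothetical.

```python
import math


def ray_circle_range(origin, angle_rad, center, radius, max_range):
    """Distance along a ray to a circle, or max_range if the ray misses."""
    dx, dy = math.cos(angle_rad), math.sin(angle_rad)
    ox, oy = center[0] - origin[0], center[1] - origin[1]
    t_closest = ox * dx + oy * dy  # projection of the center onto the ray
    dist_sq = ox * ox + oy * oy - t_closest * t_closest
    if dist_sq > radius * radius:
        return max_range  # ray passes beside the circle
    half_chord = math.sqrt(radius * radius - dist_sq)
    if t_closest + half_chord < 0:
        return max_range  # circle is entirely behind the sensor
    t_near = max(t_closest - half_chord, 0.0)  # 0 if the sensor is inside
    return min(t_near, max_range)


def scan(origin, obstacles, num_beams=360, max_range=30.0):
    """Return one range reading per beam, evenly spaced over 360 degrees."""
    ranges = []
    for i in range(num_beams):
        angle = 2.0 * math.pi * i / num_beams
        hits = (ray_circle_range(origin, angle, c, r, max_range)
                for c, r in obstacles)
        ranges.append(min(hits, default=max_range))
    return ranges


if __name__ == "__main__":
    # One obstacle of radius 1 centered 5 units in front of the sensor.
    print(scan((0.0, 0.0), [((5.0, 0.0), 1.0)], num_beams=8))
```

A game engine provides the ray casting itself, so the custom work is mostly in choosing beam count, range, and update rate, and in packaging the readings for the telemetry stream.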

85% of this work was about integrating found assets. For the most part, we didn’t write custom code. We “integrated” others’ work. We modified it to suit our testing, and will likely change it again tomorrow (e.g. dim the warehouse lights to test the latest object detection or navigation algos). Thanks to graphical game engine interfaces, these test modifications depend largely on the creativity of the designer or product manager, not on the availability of a costly engineering resource.

Simulators: not just for developers

Game engines have steadily matured over decades of development, attempting to approximate the physical world. The result is a record number of triple-A titles each year that contain astounding real-life detail and engrossing gameplay. Just as no visual effects studio recreates animation or compositing tools, game developers leverage tools like Unity to create worlds… The tools are mature, malleable, and highly efficient.

Robotics is difficult, it’s dangerous, and it’s expensive. Game engines offer a great approximation of the real world, including an approximation of hardware that is as reliable, or unreliable, as you want. We should all look to these tools to accelerate our development and improve our products, as we did with our Unity robot simulator. The simulator and the simulation are converging. And that’s great for robotics companies.

We believe the robotics world will leverage these tools and these worlds to test scenarios, find edge cases, develop hardware and software, and train operators.

Let’s have a play, shall we?

To learn more about Formant, get a demo.