If you work in the agricultural robotics industry, chances are that you have heard of or run into Cal/OSHA 3441(b). According to the website of California’s Department of Industrial Relations, the regulation states: “All self-propelled equipment shall, when under its own power and in motion, have an operator stationed at the vehicular controls.”

The regulation also provides an exception when all of the following conditions are met:

- The operator has a good view of the course of travel of the equipment and any employees in the immediate vicinity.

- The steering controls, when provided, and the brake and throttle controls are extended within easy reach of the operator’s station.

- The operator is not over 10 feet away from such controls and does not have to climb over or onto the equipment or other obstacles to operate the controls.

- The equipment is not traveling at over two miles per hour ground speed.
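Because the exception only applies when every condition holds at once, it can help to think of it as a single boolean check. The snippet below is a minimal sketch of that reading, not a compliance tool: the `OperatingState` fields and the `exception_applies` function are hypothetical names, and only the 10-foot and 2 mph limits come from the regulation text quoted above.

```python
from dataclasses import dataclass

# Limits taken from the conditions listed above.
MAX_DISTANCE_FT = 10.0  # operator not over 10 feet from the controls
MAX_SPEED_MPH = 2.0     # equipment not over two miles per hour


@dataclass
class OperatingState:
    """Hypothetical snapshot of one machine and its operator."""
    operator_has_clear_view: bool
    controls_within_easy_reach: bool
    distance_to_controls_ft: float
    must_climb_to_reach_controls: bool
    ground_speed_mph: float


def exception_applies(state: OperatingState) -> bool:
    """Return True only if all four listed conditions hold together."""
    return (
        state.operator_has_clear_view
        and state.controls_within_easy_reach
        and state.distance_to_controls_ft <= MAX_DISTANCE_FT
        and not state.must_climb_to_reach_controls
        and state.ground_speed_mph <= MAX_SPEED_MPH
    )
```

For example, a machine moving at 2.5 mph fails the check even if every other condition is satisfied, because the conditions are joined with "and" rather than "or".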

As a robotics data platform focused on helping companies accelerate their deployments and scale their fleets, we have quite a few partners in the agricultural space, and some of them were running into the same issue that Monarch Tractor ran into with Title 8, Section 3441(b) of the Cal/OSHA regulations.

For agricultural robotics companies, these regulations effectively limit self-propelled equipment to a 1:1 operator-to-equipment ratio, which can make a robot more expensive to run than a purely human-operated machine.

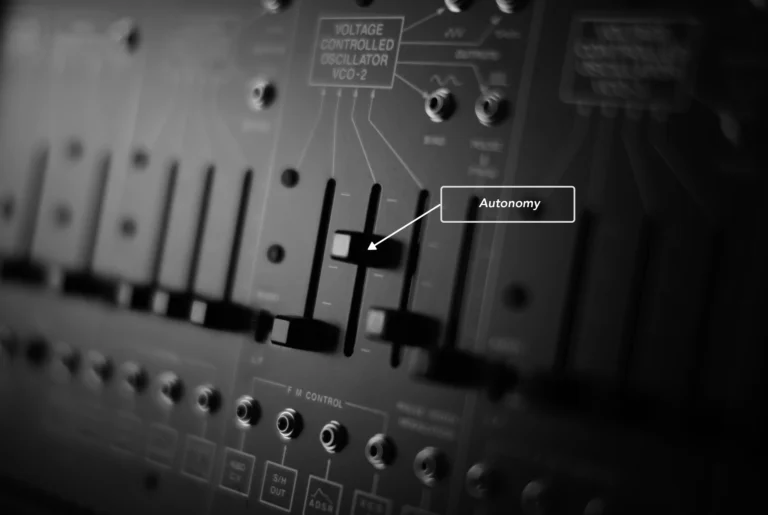

To overcome this limitation, we’ve worked to develop a solution that addresses these Cal/OSHA robot regulations for existing and future customers. The solution gives the operator a real-time view of the equipment's course of travel and easy access to the brake and throttle controls, all without needing to climb over or onto the equipment.

Let’s take a deeper look…

The project had two critical requirements:

- Reliable network connectivity between the equipment and the operator controls

- Real-time video and control of the equipment

Because farm fields often have spotty internet connectivity, the first part of the solution was finding a network setup that works across large fields.

Selecting the right network solution

We worked closely with some of our customers to evaluate a few options for network connectivity.

Option #1 – Hardware video solution

We expected this option to take less than a week to test. The goal was to de-risk the end-to-end solution and get something stood up quickly so we could make progress. It was never meant to be a perfect solution, but we knew it would be a quick, incremental step, one in which the operator might have to move the truck as the equipment moved.

We could either try a cheaper option (around $2K) that was faster to set up, such as the Accsoon CineEye 2 Pro, which might not satisfy our 500 m range requirement, or test a more expensive option such as the Teradek Bolt 4K Max, which provides 1,500 m of range but would have pushed the total cost above $10K.

The primary drawback of this approach, however, was that while these products would have provided a one-stop, real-time view from the equipment's camera, the controls would have had to be built separately.

Option #2 – IP mesh network

We wanted to start quickly, cheaply, and with low risk, but we knew going in that option #1 was not a viable end-to-end solution. We therefore started looking into solutions used in other industries that enable voice, video, and data for real-time command and control. We reviewed mesh network solutions such as DTC and Rajant as other possibilities.

Each seemed capable, but both were a little outside the budget we wanted to hit with the first iteration.

Option #3 – airMAX

The airMAX protocol (based on TDMA) was designed for outdoor use and gives priority to audio and video packets, which matched our requirements. One of our customers, working from cost analysis and recommendations from experts, had already evaluated Ubiquiti airMAX on some of the farms they were operating on and had found its range and latency appropriate for their needs. We therefore decided to move forward with airMAX for the first iteration of the test.

Real-time video and command interface

The end goal of the end-to-end solution was to have one operator per four pieces of equipment. For this to work, an operator would have to be present in the vicinity of the equipment and the field. They also had to be able to view, command, and control all four machines in real time. The solution had the following requirements:

- It needed to be reliable and real-time.

- The interface itself had to be user-friendly enough to support command and control of four devices.

- It had to run on a display in the truck on the farm.

- In addition to streaming the video over Ubiquiti airMAX, the solution needed to store video locally so that it could be audited later for analysis and improvements.

When we first set out on this project, we knew that the cameras mounted to the robot were IP cameras. Because the cameras were on the same network as the computer on the robot (the airMAX network), we assumed video could be streamed using normal IP camera methods.

Method 1: IP Camera webpage

When an IP camera is on the same network as the receiving device, the receiving device can simply open a webpage that the camera hosts and view the video. We attempted to build an iframe that encapsulated this video webpage. This worked relatively well, except that whenever the airMAX network dropped too many packets, the video feed would drop out and never recover.

Method 2: RTSP stream

Alternatively, when an IP camera is on the same network as the receiving device, the device can open an RTSP stream to the camera. We tried hosting an RTSP proxy server on the receiving device that connected to the RTSP stream hosted by the IP camera and served it to the application running on the same device. Again, the network was not stable enough: the stream occasionally froze, and more often it built up so much lag that it became unusable.

Neither of these methods was going to work for this use case, given its strict reliability and real-time requirements.
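To make the lag problem concrete, here is a small simulation. It assumes, for illustration only, that the lag came from frames queuing up faster than they could be delivered, which is one common cause of this behavior but not something we measured in this form. The `simulate_backlog` function and its parameters are hypothetical.

```python
from collections import deque
from typing import Optional


def simulate_backlog(
    max_frames: Optional[int],
    ticks: int,
    produced_per_tick: int,
    consumed_per_tick: int,
) -> int:
    """Return how many frames behind live the viewer is after `ticks`.

    max_frames=None models an unbounded buffer; a small integer models a
    buffer that silently discards the oldest frames when it is full.
    """
    queue: deque = deque(maxlen=max_frames)
    next_frame = 0   # id of the next frame the camera will produce
    last_shown = -1  # id of the most recent frame the viewer displayed
    for _ in range(ticks):
        for _ in range(produced_per_tick):
            queue.append(next_frame)  # a bounded deque drops the oldest
            next_frame += 1
        for _ in range(consumed_per_tick):
            if queue:
                last_shown = queue.popleft()
    return (next_frame - 1) - last_shown


# The camera produces 3 frames per tick, but the link delivers only 1.
unbounded_lag = simulate_backlog(None, 10, 3, 1)
bounded_lag = simulate_backlog(1, 10, 3, 1)
```

With an unbounded buffer the viewer falls further behind on every tick, while a buffer that keeps only the newest frame stays at the live edge at the cost of skipped frames. For remote operation, skipping frames is usually the better trade.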

Method 3: Web Real-Time Communications

Ultimately, we settled on the following architecture. The formant-agent, running on the robot's computer (on the same local network as the IP camera), connected to the RTSP stream hosted by the camera. Using Formant Local Signaling, we established a peer-to-peer local connection between the formant-agent and the web app on the iPad, and then streamed the video directly to the web app over the Formant real-time video stack.

We used WebRTC (Web Real-Time Communications), an open-source project that enables real-time voice, text, and video communication between web browsers and devices. It gave us low-latency audio, video, and data communication between the operator and the equipment.

Our team had already built a video communication layer, based on WebRTC, for our Teleop platform, which is often used by operators on a wired network connection controlling a robot over LTE. This real-time video stack has multiple optimizations and fail-safes to ensure high quality and low latency.

WebRTC also has MediaStreams, a media API designed specifically for real-time audio and video communication, which most of the world used without knowing it during the COVID-19 lockdown. With MediaStreams, WebRTC handles the video encoding, decoding, adaptive bitrate, re-establishing of broken sessions, and more.

Because MediaStreams didn’t give us guarantees about latency, the most important requirement when operating a robot remotely, we decided to build the video layer ourselves. Building our real-time video stack on top of WebRTC’s DataChannels gave us complete control over latency and quality of service for each packet we sent. It also gave us complete control over video encoding and decoding, including how we adapt bitrate, how we handle lost messages, and how we re-establish broken sessions. While building this system, we walked a Boston Dynamics Spot around San Francisco for more than 500 hours to make sure our real-time video stack performed at its best, even with the worst network connectivity.
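To illustrate what owning the bitrate adaptation can look like, here is a minimal sketch of a generic additive-increase, multiplicative-decrease (AIMD) controller. It is not our production algorithm: the `BitrateController` class, its thresholds, and its step sizes are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class BitrateController:
    """Hypothetical AIMD controller that favors latency over quality."""
    bitrate_kbps: float = 2000.0
    min_kbps: float = 200.0
    max_kbps: float = 8000.0
    target_latency_ms: float = 150.0  # assumed latency budget
    max_loss_fraction: float = 0.05   # assumed tolerable packet loss
    increase_kbps: float = 100.0      # additive step when the link is healthy
    decrease_factor: float = 0.7      # multiplicative cut when congested

    def update(self, measured_latency_ms: float, loss_fraction: float) -> float:
        """Adjust and return the target bitrate from the latest measurements."""
        congested = (
            measured_latency_ms > self.target_latency_ms
            or loss_fraction > self.max_loss_fraction
        )
        if congested:
            # Back off quickly so queued video does not add to the delay.
            self.bitrate_kbps = max(
                self.min_kbps, self.bitrate_kbps * self.decrease_factor
            )
        else:
            # Probe for more bandwidth slowly.
            self.bitrate_kbps = min(
                self.max_kbps, self.bitrate_kbps + self.increase_kbps
            )
        return self.bitrate_kbps
```

The asymmetry is the point of the design: cutting bitrate sharply when latency rises keeps the operator's view close to live, while the slow ramp-up avoids overshooting an unstable link.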

Formant Local Signaling

- In order to establish a WebRTC connection between two peers (robot and operator), typically a Signaling Server is used. This is a server based in the cloud that each peer can communicate with, share its networking information, and figure out how to communicate with the peer it wants to connect to.

- Because we knew we wouldn’t be guaranteed internet access in this environment, we built a system that allows the operator application to signal directly to the agent, establishing the connection without any need for access to the cloud.
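The direct exchange described above can be sketched as follows. This is a simplified illustration, not the actual Formant Local Signaling protocol: the message fields, `make_offer`, and `LocalAgent` are hypothetical, the SDP strings are placeholders, and a real WebRTC handshake would also exchange ICE candidates.

```python
import json


def make_offer(session_id: str, sdp: str) -> str:
    """Build the offer the operator app would send straight to the agent."""
    return json.dumps({"type": "offer", "session": session_id, "sdp": sdp})


class LocalAgent:
    """Stands in for the on-robot agent, answering offers with no cloud hop."""

    def __init__(self, local_sdp: str) -> None:
        self.local_sdp = local_sdp
        self.sessions: dict = {}  # session id -> remote (operator) SDP

    def handle_message(self, raw: str) -> str:
        """Accept an offer and reply with an answer for the same session."""
        msg = json.loads(raw)
        if msg.get("type") != "offer":
            return json.dumps({"type": "error", "reason": "expected offer"})
        self.sessions[msg["session"]] = msg["sdp"]
        return json.dumps(
            {"type": "answer", "session": msg["session"], "sdp": self.local_sdp}
        )


# In practice these strings would travel over the local network;
# here both peers live in one process to keep the sketch runnable.
agent = LocalAgent(local_sdp="agent-sdp-placeholder")
answer = json.loads(agent.handle_message(make_offer("s1", "operator-sdp-placeholder")))
```

The only thing that changes compared with cloud signaling is who relays the messages: the two peers still swap the same kind of session descriptions, but they do it over the local link.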

The result was a robust, low-latency, high-quality video stream from the robot, presented to the operator on the iPad, that could be established from up to 1,000 m away without external internet access.

The Final Solution

Using airMAX, local WebRTC, and local signaling, we were able to deliver a real-time control interface in a browser that can enable robotics companies in agriculture to operate machines at a 1:4 operator-to-robot ratio using an iPad.

Although we have not yet confirmed with Cal/OSHA that this solution meets all of their requirements, we are confident that it at least paves the way toward an innovative way of satisfying them.

Need help tackling other issues in robotic farming? View our webinar, “Overcoming Common Challenges In Robotic Farming” for free, or get a tailored demo of Formant.