TL;DR: We don’t wait for downtime. Our agent watches for signals—early patterns that precede incidents—then spins up a generative investigation that adapts to the situation, collaborates with your team, and acts within explicit autonomy guardrails. The result: fewer incidents, faster resolution, and expertise that compounds across your physical operations.

The 2:00 a.m. Difference

At 2:00 a.m., an incident forces everyone out of bed. Production halts. Customers wait. A root cause analysis starts under pressure.

At 2:00 p.m., a signal quietly appears: a drift in a sensor, a pattern in logs, a threshold crossing that, based on history, often precedes downtime by two to three weeks. No sirens. No panic. Just a nudge to look closer.

A signal is the stitch in time that saves nine.

Why “Signal,” Not “Incident”

The word choice is deliberate. “Incident” implies damage already done: lost throughput, missed SLAs, unhappy customers. “Signal” implies potential, a hint that something might be trending the wrong way. Catching it early lets you turn emergency firefighting into proactive operations.

Instead of “Robot A is down,” you get “Robot A is exhibiting the pattern that usually leads to downtime in ~2–3 weeks.”

That is the difference between a calm inspection and a crisis.

Generative Investigations (Adaptive by Design)

Traditional pipelines are rigid. Real facilities aren’t. For every signal, our agent composes the steps it needs on the fly:

Analyze logs → check sensor drift → validate with historical cohorts → recommend preventive maintenance

Or: Run targeted diagnostic → confirm resolution

Different signals demand different playbooks. The point isn’t to lock in one perfect flow—it’s to generate the right one for this context.
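To make the idea concrete, here is a minimal sketch of choosing steps per signal rather than running one fixed pipeline. It is a deliberately simplified, rule-based stand-in for the agent's generative planning, not the actual implementation. The `Signal` fields, the `compose_steps` function, and the step names are hypothetical and only mirror the two example flows above.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    kind: str            # hypothetical category, e.g. "sensor_drift"
    has_known_fix: bool  # hypothetical flag: this pattern was resolved before


def compose_steps(signal: Signal) -> list[str]:
    """Choose investigation steps for one signal (illustrative rules only)."""
    if signal.has_known_fix:
        # Short flow: run a targeted diagnostic, then confirm resolution.
        return ["run_targeted_diagnostic", "confirm_resolution"]

    # Long flow: start from logs, add a drift check only when it applies.
    steps = ["analyze_logs"]
    if signal.kind == "sensor_drift":
        steps.append("check_sensor_drift")
    steps += ["validate_with_historical_cohorts", "recommend_preventive_maintenance"]
    return steps
```

Calling `compose_steps(Signal("sensor_drift", has_known_fix=False))` yields the four-step flow, while a signal with a known fix gets the two-step flow.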

A Shared Status Model For Transparency

Adaptive steps, consistent progress language:

Investigating — attribute the pattern, form root‑cause hypotheses

Planning — propose a path (actions, owners, timing, rollback)

Resolving — execute actions, verify outcomes

Complete — capture learnings, update autonomy

Signal detected
└─► Investigating: pattern attribution, root‑cause hypotheses
    └─► Planning: chosen path, rollback, who/when
        └─► Resolving: execute, validate, record outcome
            └─► Complete: learnings codified, autonomy updated

That shared backbone makes it easy to track dozens of parallel investigations and coordinate work across teams.
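One way to picture the four statuses is as a small state machine. The sketch below assumes transitions only move forward, one status at a time. That is an assumption made for illustration, since the text does not say whether an investigation can move backward. The `Status` enum and `advance` function are hypothetical names.

```python
from enum import Enum


class Status(Enum):
    INVESTIGATING = "Investigating"
    PLANNING = "Planning"
    RESOLVING = "Resolving"
    COMPLETE = "Complete"


# Assumed forward-only transitions; Complete has no successor.
_NEXT = {
    Status.INVESTIGATING: Status.PLANNING,
    Status.PLANNING: Status.RESOLVING,
    Status.RESOLVING: Status.COMPLETE,
}


def advance(current: Status) -> Status:
    """Return the status that follows `current`, or raise if it is terminal."""
    if current not in _NEXT:
        raise ValueError(f"{current.value} is a terminal status")
    return _NEXT[current]
```

Because every investigation reports one of the same four values, a dashboard can group or count parallel investigations by `Status` regardless of which steps each one ran.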

Collaborative Intelligence: Operator + Agent

We don’t pretend the agent can see everything. When it can’t, it asks:

“I see a pressure anomaly that could indicate a micro‑leak, but I can’t see the equipment. Please post a photo of the valve train and confirm if there’s moisture at junction #2.”

The agent handles large‑scale pattern recognition, but it needs the operator’s help with perception, safety, and judgment. Together, they resolve issues the agent couldn’t solve alone, and faster than the operator could alone.

Escalation with Context (Never a Dumb Hand‑Off)

The agent operates within a mandate and a confidence threshold. Inside those bounds, it acts autonomously. Outside them, it escalates with a crisp brief:

What I found: signal → likely cause

What I did: checks run, diagnostics, mitigations

What I’m unsure about: gaps, risks

What I need from you: e.g., “visual confirm gasket moisture,” “approve firmware rollback,” “schedule bearing inspection”

This prevents context‑free paging and shortens mean‑time‑to‑decision.
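The four-part brief above maps naturally onto a small record type. The following is a sketch under assumptions: the `EscalationBrief` class, its field names, and the `render` method are hypothetical, and only the four headings come from the text.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class EscalationBrief:
    found: str         # signal and likely cause
    did: list[str]     # checks run, diagnostics, mitigations
    unsure: list[str]  # gaps and risks
    need: list[str]    # concrete asks for the operator

    def render(self) -> str:
        """Format the brief as four labeled lines for a page or chat message."""
        return "\n".join([
            f"What I found: {self.found}",
            f"What I did: {'; '.join(self.did)}",
            f"What I'm unsure about: {'; '.join(self.unsure)}",
            f"What I need from you: {'; '.join(self.need)}",
        ])
```

Making all four fields required means a brief cannot be constructed without stating what was done and what is being asked, which is the point of escalating with context.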

Trust, Guardrails, and the Learning Loop

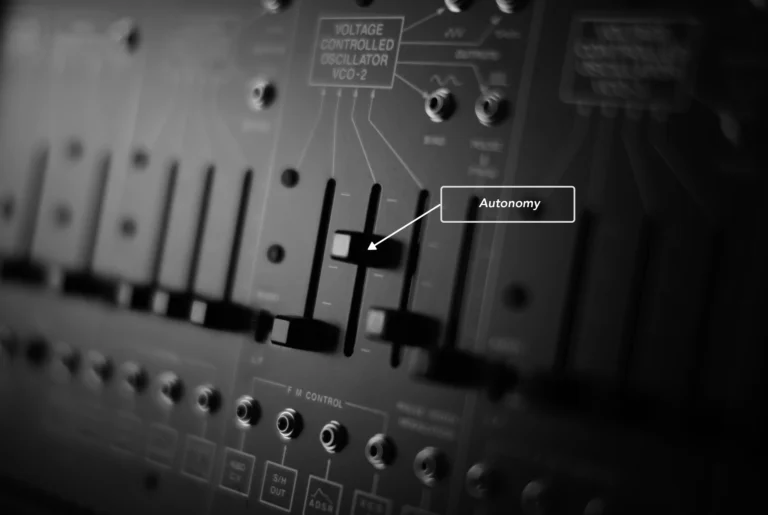

Autonomy guardrails: allowed actions, scope of change, time windows, and rollback plans are explicit. Safety checks ensure actions are reversible and bounded.

Confidence thresholds: the agent quantifies uncertainty. Below threshold → escalate. Above threshold → proceed with logging and verification.

Learning loop: every investigation writes back what worked, what didn’t, and which steps were decisive. Over time, more issues qualify for safe auto‑resolution, and escalations become rarer—and sharper.

Philosophy: We believe in augmentation, not automation. Operators stay in the loop and in control by design, and the agent earns trust incrementally.
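The guardrail and confidence checks described in this section can be sketched as a single decision function. This is a minimal illustration, not the product's logic: `Guardrails`, `ProposedAction`, and `decide` are hypothetical names, only two of the listed guardrails (allowed actions and rollback) are modeled, and treating a confidence exactly equal to the threshold as "proceed" is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrails:
    allowed_actions: frozenset[str]  # actions the agent may take on its own
    confidence_threshold: float      # minimum confidence to act autonomously


@dataclass(frozen=True)
class ProposedAction:
    name: str
    confidence: float   # agent's estimate, between 0 and 1
    has_rollback: bool  # whether a rollback plan exists


def decide(action: ProposedAction, rails: Guardrails) -> str:
    """Return "proceed" only when every check passes, else "escalate"."""
    if action.name not in rails.allowed_actions:
        return "escalate"  # outside the mandate
    if not action.has_rollback:
        return "escalate"  # not reversible
    if action.confidence < rails.confidence_threshold:
        return "escalate"  # below threshold
    return "proceed"       # at or above threshold (assumed inclusive)
```

In this sketch, expanding autonomy over time would mean adding entries to `allowed_actions` or adjusting `confidence_threshold` for a class of issues as the learning loop accumulates evidence.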

What Your Team Actually Sees

1) Live Signals Feed: prioritized by risk, impact window, and confidence, with plain‑language summaries and drill‑downs to logs/metrics/history.

2) Investigation Cards: each in one of four statuses. Examples:

Investigating: “Telemetry on Units R14–R17 matches pre‑failure pattern observed 7× last quarter.”

Planning: “Propose: recalibrate IMU on R14 today; observe R15–R17 for 48h. Rollback in place.”

Resolving: “Executing calibration on R14; monitoring post‑action drift.”

Complete: “Pattern neutralized; added ‘IMU recal temp‑window’ heuristic to library.”

3) Actionable Escalations: concrete asks with context: approvals, photos, quick on‑site checks.

4) Weekly Digest: signals caught early, time‑to‑resolution, incidents prevented, and newly auto‑resolvable classes added.
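As an illustration of how a feed could be ordered by risk, impact window, and confidence, the sketch below sorts on those three fields in that order. The ordering rule, the `FeedItem` fields, and the `prioritize` function are assumptions made for the example. The text names the three factors but not how they are combined.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FeedItem:
    summary: str
    risk: float            # 0 to 1, higher means more severe (assumed scale)
    days_to_impact: float  # estimated time until likely downtime
    confidence: float      # 0 to 1, agent's confidence in the pattern match


def prioritize(items: list[FeedItem]) -> list[FeedItem]:
    """Highest risk first, then soonest impact, then highest confidence."""
    return sorted(
        items,
        key=lambda s: (-s.risk, s.days_to_impact, -s.confidence),
    )
```

A weighted score would be an equally plausible design. The lexicographic order is used here only because it is easy to explain to an operator reading the feed.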

Example: End‑to‑End in the Wild

Signal: Conveyor torque variance rising on Line 3; 82% similarity to a known pre‑failure pattern (typical failure T+10–14 days).

Investigating: Correlate torque with ambient temperature, belt wear logs, and jam events; compare across sister lines.

Planning: Recommend belt tension check and targeted lubrication now; schedule bearing inspection during Friday changeover.

Resolving: Execute checks; variance returns to baseline; set 7‑day watch.

Complete: Codify “torque‑temp‑wear triad” heuristic; raise confidence for future auto‑resolution.

Outcome: No downtime, no scramble—just a quiet save.

The Outcomes That Matter

Fewer incidents: because you catch precursors days or weeks in advance.

Faster resolution: because root‑cause work is done before escalation.

Distributed expertise: one expert’s trick becomes fleet‑wide knowledge.

Expanding autonomy: safe auto‑resolutions grow over time as trust accumulates.

Want to transform your operations from reactive to proactive? Come talk to us!